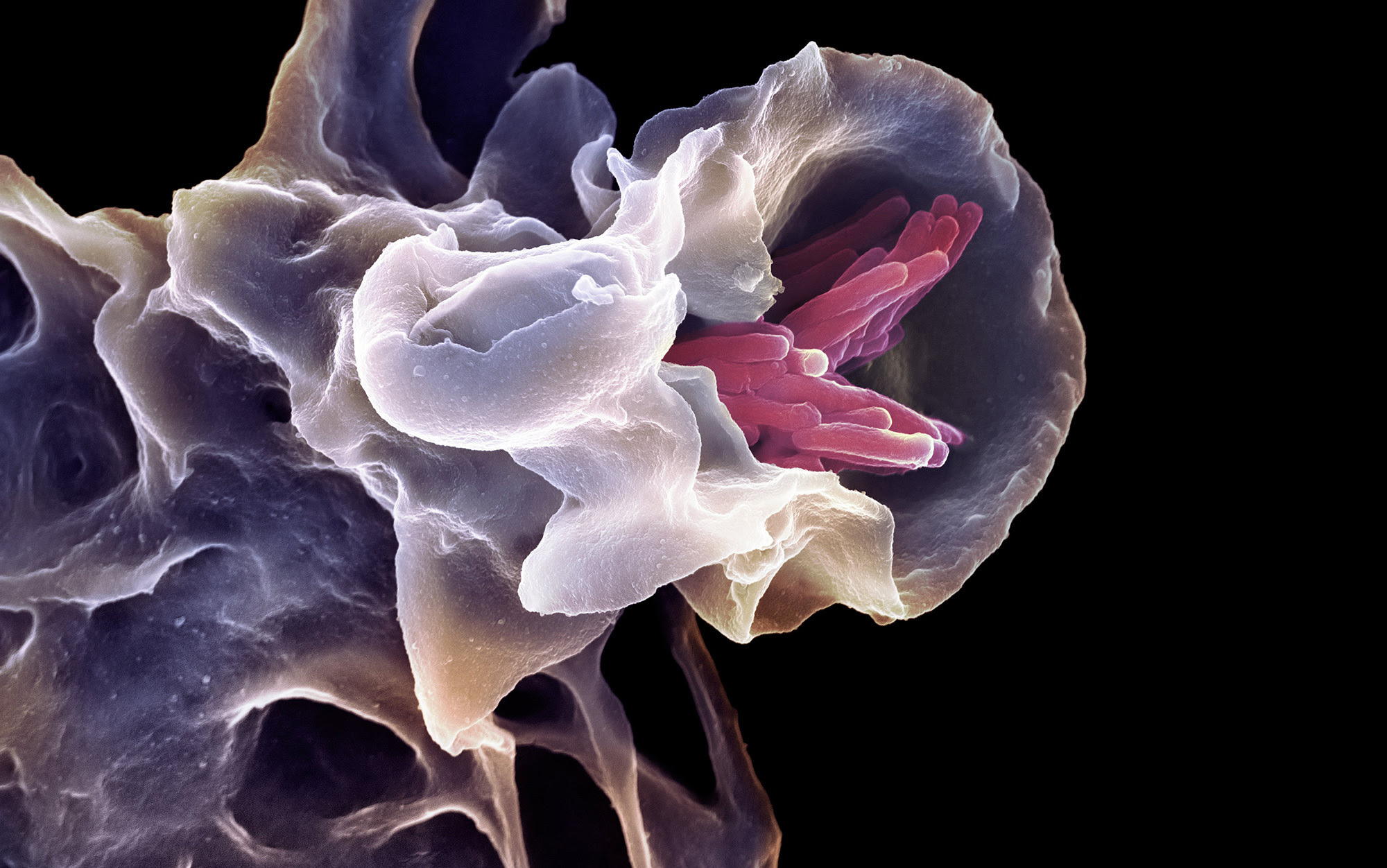

Life with purpose

Biologists balk at any talk of ‘goals’ or ‘intentions’ – but a bold new research agenda has put agency back on the table

Animal immune systems depend on white blood cells called macrophages that devour and engulf invaders. The cells pursue with determination and gusto: under a microscope you can watch a blob-like macrophage chase a bacterium across the slide, switching course this way and that as its prey tries to escape through an obstacle course of red blood cells, before it finally catches the rogue microbe and gobbles it up. But hang on: isn’t this an absurdly anthropomorphic way of describing a biological process? Single cells don’t have minds of their own – so surely they don’t have goals, determination, gusto? When we attribute aims and purposes to these primitive organisms, aren’t we just succumbing to an illusion? Indeed, you might suspect this is a real-life version of a classic psychology experiment from 1944, which revealed the human impulse to attribute goals and narratives to what we see. When Fritz Heider and Marianne Simmel showed people a crudely animated movie featuring a circle and two triangles, most viewers constructed a melodramatic tale of pursuit and rescue – even though they were just observing abstract geometric shapes moving about in space. Yet is our sense that the macrophage has goals and purpose really just a narrative projection? After all, we can’t meaningfully describe what a macrophage even is without referring to its purpose: it exists precisely to conduct this kind of ‘seek and destroy’ manoeuvre. One of biology’s most enduring dilemmas is how it dances around the issue at the core of such a description: agency, the ability of living entities to alter their environment (and themselves) with purpose to suit an agenda.
Typically, discussions of goals and purposes in biology get respectably neutered with scare quotes: cells and bacteria aren’t really ‘trying’ to do anything, just as organisms don’t evolve ‘in order to’ achieve anything (such as running faster to improve their chances of survival). In the end, it’s all meant to boil down to genes and molecules, chemistry and physics – events unfolding with no aim or design, but that trick our narrative-obsessed minds into perceiving these things. Yet, on the contrary, we now have growing reasons to suspect that agency is a genuine natural phenomenon. Biology could stop being so coy about it if only we had a proper theory of how it arises. Unfortunately, no such thing currently exists, but there’s increasing optimism that a theory of agency can be found – and, moreover, that it’s not necessarily unique to living organisms. A grasp of just what it is that enables an entity to act as an autonomous agent, altering its behaviour and environment to achieve certain ends, should help reconcile biology to the troublesome notions of purpose and function. A bottom-up theory of agency could help us interpret what we see in life, from cells to societies – as well as in some of our ‘smart’ machines and technologies. We’re starting to wonder whether artificial intelligence systems might themselves develop agency. But how would we know, if we can’t say what agency entails? Only if we can ‘derive complex behaviours from simple first principles’, says the physicist Susanne Still of the University of Hawai‘i at Mānoa, can we claim to understand what it takes to be an agent. So far, she admits that the problem remains unsolved. Here, though, is a sketch of what a solution might look like. One of the most determined efforts to insulate biology from the apparent teleology that life awakens in matter came from the biologist Ernst Mayr, in his book What Makes Biology Unique? (2004). He acknowledged that biology can’t avoid speaking in terms of function. 
Our eyes evolved so that we can better navigate our environment; the function of the lactase enzyme is to break down lactose sugars; and so on. Goal-directed actions seem almost axiomatically biological: molecular and cell biologists, neuroscientists and geneticists can barely do their work without employing this mode of thinking. Yet they’re often quick to insist that it’s just a figure of speech – an interpretive stance, and nothing more. Organisms do what they do only because they’re genetically programmed via natural selection to do so. Even in the human sciences, there’s been resistance to notions of true agency. Under the doctrine of radical behaviourism – initiated by the American psychologist B F Skinner in the 1930s, and which was a prominent strand in psychology until the 1980s – animal behaviour was just learned (‘conditioned’) actions, trained into initially blank-slate minds. Even today, there’s a widespread reluctance to accept that other animals’ cognitive processes add up to genuinely autonomous choices. A popular narrative now casts all living entities as ‘machines’ built by genes, as Richard Dawkins called them. For Mayr, biology was unique among the sciences precisely because its objects of study possessed a program that encoded apparent purpose, design and agency into what they do. On this view, agency doesn’t actually manifest in the moment of action, but is a phantom evoked by our genetic and evolutionary history. But this framing doesn’t explain agency; it simply tries to explain it away. Individual genes have no agency, so agency can’t arise in any obvious way from just gathering a sufficient number of them together. Pinning agency to the genome doesn’t tell us what agency is or what makes it manifest. Besides, genes don’t fully specify behavioural outcomes in any given situation – not just in humans, but even in very simple organisms. 
Genes can imbue dispositions or tendencies, but it’s often impossible to predict precisely what an organism will do even when it’s mapped down to the last cell and gene. If all behaviour were hardwired, individual organisms could never find creative solutions to novel problems, such as the ability of New Caledonian crows to shape and use improvised tools to obtain food. This reveals a crucial dimension of agency: the ability to make choices in response to new and unforeseen circumstances. When a hare is being pursued by a wolf, there’s no meaningful way to predict how it will dart and switch this way and that, nor whether its gambits will suffice to elude the predator, who responds accordingly. Both hare and wolf are exercising their agency. No one should suppose that macrophages are acting in the rich cognitive environment available to a wolf, but sometimes it’s hard to decide where the distinctions lie. Confusion can arise from the common assumption that complex agential behaviour requires a concomitantly complex mind. In the ordinarily sedate waters of plant biology, for example, a storm is currently raging over whether or not plants have sentience and consciousness. Some things that plants do – such as apparently selecting a direction of growth based on past experience – can look like purposeful and even ‘mindful’ action, especially as they can involve electrical signals reminiscent of those produced by neurons. But if we break down agency into its constituents, we can see how it might arise even in the absence of a mind that ‘thinks’, at least in the traditional sense. Agency stems from two ingredients: first, an ability to produce different responses to identical (or equivalent) stimuli, and second, to select between them in a goal-directed way. Neither of these capacities is unique to humans, nor to brains in general. The first ingredient, the ability to vary a response to a given stimulus, is the easiest to procure. 
It requires mere behavioural randomness, like a coin flip. Such unpredictability makes evolutionary sense, since if an organism always reacted to a stimulus in the same way, it could become a sitting duck for a predator. This does actually happen in the natural world sometimes: a certain species of aquatic snake triggers a predictable escape manoeuvre in fish that takes the fish directly into the snake’s jaws. An organism that reacts differently in seemingly identical situations stands a better chance of outwitting predators. Cockroaches, for example, run away when they detect air movements, moving in more or less the opposite direction to the airflow – but at a seemingly random angle. Fruit flies show some random variation in their turning movements when they fly, even in the absence of any stimulus; presumably that’s because it’s useful (in foraging for food, say) to broaden your options without being dependent on some signal to do so. Such unpredictability is even enshrined in an aphorism known as the Harvard Law of Animal Behaviour: ‘Under carefully controlled experimental circumstances, the animal behaves as it damned well pleases.’ One type of single-celled aquatic organism called a ciliate – a microscopic, trumpet-shaped blob that attaches to surfaces, anemone-like – offers a striking example of randomness in a simple, brainless organism. When researchers fired a jet of tiny plastic beads at it, mimicking the encroachment of a predator, it sometimes reacted by contracting, and sometimes by detaching and floating away, with unpredictable 50:50 odds. Evidently, you don’t even need so much as a nervous system to get random. Generating behavioural alternatives isn’t the same as agency, but it’s a necessary condition. It’s in the selection from this range of choices that true agency consists. 
This selection is goal-motivated: an organism does this and not that because it figures this would make it more likely to attain the desired outcome. In humans, this selection process often entails some hefty cogitation by dedicated neural circuitry, notes the cognitive scientist Thomas Hills of the University of Warwick in the United Kingdom. For us, ‘free’ choice is typically effortful, involving the conscious contemplation of imagined future scenarios based on past experience. It demands an ability to construct an image of the self within our environment: a ‘rich cognitive representation’ that allows those scenarios to be imagined in sufficient detail to reliably predict outcomes. It also requires an ability to maintain a focus on our goal in the face of distractions and disturbances. None of this need be uniquely human, Hills says – we know that other animals ‘selectively replay patterns of neural activation associated with past experience’, for example, as if preparing for similar encounters in the future. But it might, he says, demand a specific ‘architecture’ of mind. However, if we’re going to ascribe agency to cells and ciliates, we can’t make it depend on cognitive resources this elaborate. But these simple biological entities aren’t alone in showing an ability to choose among behavioural alternatives; such an ability appears to be present in systems that have no explicit biology in them at all. To get to the nub of agency, we need to leave biology behind. Instead, we can look at agency through the prism of the physics of information, and reflect on the role that information processing plays in bringing about change. This isn’t a new approach. In the mid-19th century, physical scientists figured that all change in the Universe is governed by the second law of thermodynamics, which states that the change must lead to an increase in entropy – loosely speaking, in the overall amount of disorder among particles. 
It’s because of the second law that heat moves spontaneously from hot regions to colder ones. In 1867, the physicist James Clerk Maxwell dreamt up a loophole in the law. He imagined an ingenious microscopic being, later dubbed Maxwell’s demon, that has a box full of gas particles, divided into two compartments. The demon operates a mechanism that sifts ‘hot’ from ‘cold’ particles by letting them pass selectively through a trapdoor in the dividing wall between the chambers. As a result, the hot (faster-moving) particles would gather on one side, and the cold (slower) in the other. Normally, the second law dictates the opposite: that heat spreads and dissipates until the gas has a uniform temperature throughout. The demon in the thought experiment conquers this thermodynamic decree by having access to microscopic information about the particles’ motion – information we could never hope to perceive. Tellingly, the demon exhibits agency in making use of this information. It has a goal, to attain which it chooses to open the trapdoor for some particles and not for others – depending not just on their energy, but on which compartment they’re in. To try to understand if Maxwell’s hypothetical demon really undermined the second law, later scientists replaced the ill-defined and capricious demon with a hypothetical mechanism. You could manage it with a fairly simple electronically controlled trapdoor, say, coupled with devices that can sense the particles’ speed or energy. This amounts to putting in purpose and agency by intentional design: specifying how the information gathered from the environment will be used to decide on the course of action (to open the trapdoor or not). The demon then becomes an ‘information engine’: a mechanism that harnesses information to do its work (here, building up a reservoir of hot gas). 
It was by automating the demon in this way that it was finally tamed. In the 1960s, the physicist Rolf Landauer showed that the demon-machine would ultimately have to reimburse the entropy losses it racks up as it segregates hot and cold molecules. To use the information it gathers about particles’ motions, the device has to first record them in a memory of some kind. But any real memory has a finite capacity – and a box of gas contains a lot of molecules. So the memory has to be wiped every so often to make room for new information. And that erasure, Landauer showed, has an unavoidable entropic overhead. All the entropy lost by separating hot from cold is recouped by resetting the memory. Landauer’s analysis revealed a deep link between thermodynamics and information. Information – more specifically, the capacity to store information about the environment – is a kind of fuel that must be constantly replenished. What the demon achieves is precisely the characteristic of living organisms identified in the 1940s by the physicist Erwin Schrödinger: it creates and sustains order in the face of the tendency of the second law to erode it. Though he wasn’t the first to do so, Schrödinger pointed out that organisms too must ultimately pay for their internal organisation and order by increasing the entropy of their surroundings – that’s why our bodies generate heat. His seminal little book What Is Life? (1944), based on a series of lectures, had a big impact on several scientists who turned from physics to consider how life operates – including Francis Crick, who, with James Watson, discovered the structure of DNA in 1953. Schrödinger believed that the apparent agency of life was sustained entirely by encoded instructions that specify its responses to the environment. 
And it’s certainly true that the mechanical readout of instructions shaped by evolution and stored in some molecular form – which Schrödinger called an ‘aperiodic crystal’, and which Crick and Watson identified as DNA – explains a great deal about how living things work. But that view leaves no room for the contingent, contextual and versatile operation of real agency, whereby the agent has a goal but no prescribed route to attaining it. It’s one of the great missed opportunities in science that Schrödinger failed to connect his view of life as organisation maintained against entropic decline with the work his fellow physicists were doing on Maxwell’s demon. This is the missing link that could release biology from having to pretend that agency is just a convenient fiction, a mirage of evolution. ‘What is needed to fully understand biological agency,’ say the complex-systems theorist Stuart Kauffman and the philosopher Philip Clayton, ‘has not yet been formulated: an adequate theory of organisation.’ This link between organisation, information and agency is finally starting to appear, as scientists now explore the fertile intersection of information theory, thermodynamics and life. In 2012, Susanne Still, working with Gavin Crooks of the Lawrence Berkeley National Laboratory in California and others, showed why it’s vital for a goal-directed entity such as a cell, an animal or even a tiny demon to have a memory. With a memory, any agent can store a representation of the environment that it can then draw upon to make predictions about the future, enabling it to anticipate, prepare and make the best possible use of its energy – that is, to operate efficiently. Energy efficiency is clearly an important goal in evolutionary biology: an organism that wastes less energy can devote less time to acquiring it (by scavenging, say). But energy efficiency is crucial in technology too. 
It was a desire to improve the efficiency of machines that drove the discovery of thermodynamics in the early 19th century. These principles revealed that, while it’s impossible to do any useful work without a cost in entropy, the more efficient you are, the less of your energy is dissipated as useless heat. Maxwell’s demon fails to evade this entropic tax because its memory is finite and eventually has to be wiped. Even so, the demon is aware of events at the molecular level that no living organism can hope to access, and this is what permits it to take control of what looks to us like a random mess of particle motions. But how can your goal be best achieved – how can you maximise your agency – when you’re not so all-seeing? That’s where the value of prediction and anticipation comes in. ‘Most real systems, especially biological ones, have “perception filters”,’ says Still, ‘which means they can’t access the underlying true state of reality, but only can measure some aspects thereof. They are forced to operate on partial knowledge and need to make inferences.’ By considering how real-world ‘information-engines’ might cope with the fact that the world can’t be observed in its entirety, Still, Crooks and their colleagues found that efficiency depends on an ability to focus only on information that’s useful for predicting what the environment is going to be like moments later, and filtering out the rest. In other words, it’s a matter of identifying and storing meaningful information: that which is useful to attaining your goal. The more ‘useless’ information the agent stores in its memory, the researchers showed, the less efficient its actions. In short, efficient agents are discerning ones. There are still wrinkles in this picture. In general, the environment isn’t a static thing, but something that the agent itself affects. So it’s not enough to simply learn about the environment as it is, because, says Still, ‘the agent changes the process to be learned about’. 
That creates a much trickier scenario. The agent might then be faced with the choice of adapting to circumstances or acting to alter those circumstances: sometimes it might be better to go around an obstacle, and sometimes to try to tunnel through it. What’s more, taking action is effective only when the environment can accommodate that strategy. There’s little point in trying to make a change faster than your surroundings can respond to those efforts, say; if you shake a salt cellar too fast (at ultrasonic frequencies) nothing will come out. As Still puts it: ‘There might be a sort of “impedance match” between certain action sequences and the dynamics of the environment.’ And in real life, agents might have to find good compromises between several conflicting goals. Still says she’s now actively working on how information-engine agents can cope with these problems. How, though, does an agent ever find the way to achieve its goal, if it doesn’t come preprogrammed for every eventuality it will encounter? For humans, that often tends to come from a mixture of deliberation, experience and instinct: heavyweight cogitation, in other words. Yet it seems that even ‘minimal agents’ can find inventive strategies, without any real cognition at all. In 2013, the computer scientists Alex Wissner-Gross at Harvard University and Cameron Freer, now at the Massachusetts Institute of Technology, showed that a simple optimisation rule can generate remarkably lifelike behaviour in simple objects devoid of biological content: for example, inducing them to collaborate to achieve a task or apparently to use other objects as tools. Wissner-Gross and Freer carried out computer simulations of disks that moved around in a two-dimensional space, a little like cells or bacteria swimming on a microscope slide. 
The disk could follow any path through the space, but subject to a simple overarching rule: the disk’s movements and interactions had to maximise the entropy it generated over a specified window of time. Crudely speaking, this tended to entail keeping open the largest number of options for how the object might move – for example, it might elect to stay in open areas and avoid getting trapped in confined spaces. This requirement acted like a force – what Wissner-Gross and Freer dubbed an ‘entropic force’ – that guided the object’s movements. Oddly, the resulting behaviours looked like intelligent choices, made to secure a goal. In one example, a large disk ‘used’ a small disk to extract a second small disk from a narrow tube – a process that looked remarkably like tool use. In another example, two disks in separate compartments synchronised their movements to manipulate a larger disk into a position where they could interact with it – behaviour that looked like social cooperation. The computer simulations of these scenarios were reminiscent of Heider and Simmel’s animations. But the comparison is misleading, because that 1944 experiment really did embody hidden intentions – the movements were designed by humans precisely to elicit a sense of the objects’ agency in the eyes of the observer. Wissner-Gross and Freer’s model, by contrast, just stipulated a mathematical prescription for how the system’s total entropy should change over time. The agents were, in effect, using their ability to project their actions into the future – to work out the entropic consequences – to make their ‘choices’. In the real world, objects that conduct such a computation autonomously would need to have some way of internally representing the possible trajectories in their environment – that is, a kind of working memory. They’d also need inherent computational resources to figure out the consequences. 
But Wissner-Gross and Freer’s model was never supposed to present a biologically realistic scenario. Rather, the key point was that the agency required to solve apparently complex problems can emerge from a strikingly simple entropic rule. The claim isn’t that biological agency really arises in this way, but that simple physical principles, rather than cognitive complexity, can suffice to generate complex, goal-directed behaviour. Here, then, is a possible story we can tell about how genuine biological agency arises, without recourse to mysticism. Evolution creates and reinforces goals – energy-efficiency, say – but doesn’t specify the way to attain them. Rather, an organism selected for efficiency will evolve a memory to store and represent aspects of its environment that are salient to that end. That’s what creates the raw material for agency. Meanwhile, an organism selected to avoid predation or to forage efficiently will evolve an ability to generate alternative courses of action in response to essentially identical stimuli: to create options and flexibility. At first, the choice among them might be random. But organisms with memories that permit ‘contemplation’ of alternative actions, based on their internal representations of the environment, could make more effective choices. Brains aren’t essential for that (though they can help). There, in a nutshell, is agency. It might not have happened this way, of course. But such a picture has the virtue of breaking down the complex faculty of agency into simpler processes that don’t depend on highly specific (or even ‘organic’) hardware. It also reflects the way that complex cognition often seems to have evolved from a mash-up of capabilities that arose for other purposes. At the very least, the latest research suggests that it’s wrong to regard agency as just a curious byproduct of blind evolutionary forces. 
Nor should we believe that it’s an illusion produced by our tendency to project human attributes onto the world. Rather, agency appears to be an occasional, remarkable property of matter, and one we should feel comfortable invoking when offering causal explanations of what we’re observing. If we want to explain why a volcanic rock is at a particular location, we can tell a causal story in terms of mere mechanics, devoid of any goal: heat – molecular motions – in the deep Earth, along with gravity, produced a convective flow of rock that brought magma to the surface. However, if we want to explain why a bird’s nest is in a particular location, it won’t do to explain the forces that acted on the twigs to deliver them there. The explanation can’t be complete without invoking the bird’s purpose in building the nest. We can’t explain the microscopic details – all those cellulose molecules in the wood having a particular location and configuration – without calling on higher-level principles. A causal story of the nest can never be bottom-up. ‘Agents are causes of things in the Universe,’ says the neuroscientist Kevin Mitchell of Trinity College Dublin. You might say that a blind, mechanical story is still available for the bird’s nest, but it just needs to be a bigger story: starting, say, with the origin of life and the onset of Darwinian evolution among not-yet-living molecules. But no such baroque and fine-grained view will ever avoid the need to talk about the agency of the bird – not if it’s to have the true explanatory power of supplying a ‘why’ to the existence of the nest on this oak branch. Agency is one of the classic examples of an emergent property, which arises from, but is not wholly (and perhaps not at all) accounted for by, the properties of more ‘fundamental’ constituents. 
A genuine theory of agency might finally help to clarify what science can say about free will. For that’s arguably little more than agency plus consciousness: a capacity to make decisions that influence the world in a purposive manner, and to be aware of ourselves doing it. On this view, the problem of free will adds no new hurdles to the (admittedly daunting) problem of consciousness. Moreover, bringing in agency allows free will to be considered from an evolutionary and a neuroscientific point of view, distinct from philosophical problems of determinism. The crucial point of all this is that agency – like consciousness, and indeed life itself – isn’t just something you can perceive by squinting at the fine details. Nor is it some second-order effect, with particles behaving ‘as if’ they’re agents, perhaps even conscious agents, when enough of them get together. Agents are genuine causes in their own right, and don’t deserve to be relegated to scare quotes. Those who object can do so only because we’ve so far failed to find adequate theories to explain how agency comes about. But maybe that’s just because we’ve failed to seek them in the right places – until now.

Coloured scanning electron micrograph of a macrophage white blood cell (purple) engulfing a tuberculosis (Mycobacterium tuberculosis) bacterium (pink). Photo by Science Photo Library

13 November 2020
